IS NeRF THE FUTURE OF 3D SCANNING?

Neural Radiance Fields (NeRF) could be the breakthrough technology that transforms 3D scanning. This article explores how NeRF may lead to faster, cleaner, and more accessible 3D scanning solutions in the near future.

Introduction

As a 3D scanning software company, we’re obliged and excited to hypothesise about where this technology sector is headed.

What’s the ultimate 3D scanning tech going to look like a few years from now? Will 3D scanners be able to produce clean results that don’t require any mesh cleanup and, if so, when?

Will this be as widely accessible as a smartphone face cam? Or will it be a state-of-the-art, completely wireless device costing thousands of dollars?

Surely, there’ll be a mind-blowing 3D scanning technology that’ll deliver hyper-realistic 3D results in minutes or seconds, right?

Well, hang on tight, because we might just be getting propelled into that future much sooner than expected. Not so long ago, back in 2020, a handful of incredibly smart researchers introduced the concept of “NeRF”, short for Neural Radiance Fields. While it still presents some obstacles, the way it works and the results it produces are simply mind-blowing.

A NeRF-rendered 3D scene generated by KIRI's NeRF algorithm

Tech-driven early adopters like ourselves are already super hyped about NeRF and believe that, once harnessed, it’ll take 3D scanning use cases to a-no-ther level. This is BIG, it’s a breakthrough (for real).

NeRF has the potential to literally change our lives, personal and professional. Imagine a sort of Google Earth for everything, and what you could do with this tech at your fingertips.

Need to see it to believe it? So did we. Let’s go.

But first let’s take a quick, simplified look at the main existing 3D scanning technologies so you can understand why we’re so excited to be working on NeRF!

Current 3D scanning methods and their limitations

The three main 3D scanning technologies used by 3D scanner manufacturers and software developers are structured light, laser triangulation, and photogrammetry. There are a few other, less commonly seen technologies but we’ll focus on these three to cover just the basics.

Generalities

As a general informative note, 3D scanner prices can range anywhere from US$500 for the cheapest solution to well over US$100,000 for the most advanced, industrial solutions.

To give you an idea, a good, basic professional 3D scanning setup (including hardware, 3D editing software, accessories, and a monster-power PC) will generally cost upward of US$5,000. If you’re in digital dentistry or quality inspection, you can double that, at the very least.

There are so many price ranges, depending on so many factors, and therefore many exceptions to the following generalities. Our goal here is just to provide a quick overview.

Structured light

Structured light 3D scanners are by far the most common types of 3D scanners on the market today. These solutions come in all shapes and sizes:

A collection of different kinds of structured-light 3D scanners (Image source: All3DP, "The Best 3D Scanners of 2022", https://all3dp.com/1/best-3d-scanner-diy-handheld-app-software/)

They work by using a light projector (like a video projector at the movies) to project a series of light patterns onto the object being scanned. For example, the pattern can be a simple series of stripes; when the stripes hit the object, they each deform differently based on the object’s shape.

Light pattern of a structured-light 3D scanner (Image source: Alexandria P., 3D Natives, "3D scanning through structured light projection", https://www.3dnatives.com/en/structured-light-projection-3d-scanning/)

The software then calculates the object’s 3D shape based on how much the stripes were deformed here and there. It quickly creates a series of 3D coordinate points, each with an x, y, and z.
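
To make that a little more concrete, here is a minimal sketch of the idea in Python. It assumes a heavily simplified setup: the projector and camera sit side by side like a stereo pair, and the sideways shift of a stripe (in pixels) plays the role of stereo disparity. The function names and parameters are ours, purely for illustration; real scanners use calibrated models and more elaborate pattern decoding.

```python
def depth_from_stripe_shift(focal_length_px, baseline_m, shift_px):
    """Depth (in meters) of a surface point from the observed stripe shift.

    Simplified triangulation: depth = focal length * baseline / shift.
    Assumes an idealized, rectified projector-camera pair (an assumption
    made for this sketch, not how any specific scanner works).
    """
    if shift_px <= 0:
        raise ValueError("shift_px must be positive")
    return focal_length_px * baseline_m / shift_px


def point_from_pixel(u, v, cx, cy, focal_length_px, depth_m):
    """Turn a pixel (u, v) and its depth into an (x, y, z) coordinate.

    (cx, cy) is the image center in pixels (pinhole camera model).
    """
    x = (u - cx) * depth_m / focal_length_px
    y = (v - cy) * depth_m / focal_length_px
    return (x, y, depth_m)


# Example: a stripe shifted by 50 px, seen by a camera with a 1000 px
# focal length, 10 cm away from the projector.
z = depth_from_stripe_shift(1000.0, 0.1, 50.0)
print(point_from_pixel(700.0, 400.0, 640.0, 360.0, 1000.0, z))
```

The bigger the stripe shift, the closer the surface. Repeat this for every pixel that sees a stripe and you get the cloud of x, y, z points mentioned above.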

Since regular light projectors can come at a very low price, it means the structured light 3D scanners that use them can be relatively cheap to produce (compared to laser scanners, for example).

That’s why you can see low-cost options starting at around US$500. But there are also industrial-grade structured light 3D scanners with more expensive, high-precision optical components and general hardware/software.

On its own, structured light technology cannot generate texture maps (colors), but most structured light 3D scanners will feature a built-in RGB camera to do that.

Pros:

- Speed

- Precision

- Real-time 3D model preview

Cons:

- Price

- Challenged by dark-coloured surfaces (darkness absorbs light, throws off measurements)

- Challenged by shiny, reflective, and/or transparent surfaces (light is reflected, throws off measurements; requires mattifying spray or powder)

- Sensitive to broad daylight or strong lighting

Laser triangulation

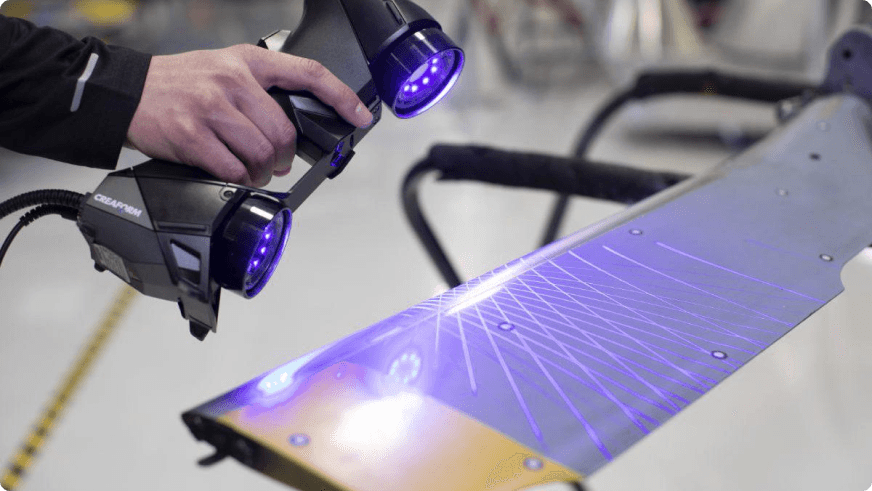

There are numerous laser-based 3D scanning technologies, including ToF (time-of-flight, like LiDAR) and phase shifting. But the most common one, which we can see in most of today’s professional handheld 3D scanners, is laser triangulation.

A laser triangulation-based 3D scanner (Image source: Aniwaa, "Handheld 3D scanning", https://www.aniwaa.com/topic/handheld-3d-scanners/)

The 3D scanner projects laser lines onto the object and uses trigonometric triangulation to calculate its shape, based on how the laser lines bounce off of the object and back into the scanner’s sensors.
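
For the curious, the trigonometry can be sketched in a few lines of Python. This is a textbook version, assuming a single laser point, a laser emitter and a sensor separated by a known baseline, and known angles at both ends. The function name and parameters are hypothetical and not taken from any scanner’s software.

```python
import math


def laser_triangulation_depth(baseline_m, laser_angle_deg, camera_angle_deg):
    """Perpendicular distance from the baseline to the laser spot.

    The emitter, the sensor, and the laser spot form a triangle. With the
    baseline length and the two angles at its ends known, the law of sines
    gives: depth = b * sin(alpha) * sin(beta) / sin(alpha + beta).
    """
    alpha = math.radians(laser_angle_deg)
    beta = math.radians(camera_angle_deg)
    if alpha <= 0 or beta <= 0 or alpha + beta >= math.pi:
        raise ValueError("angles must form a valid triangle")
    return baseline_m * math.sin(alpha) * math.sin(beta) / math.sin(alpha + beta)


# Example: emitter and sensor 20 cm apart, both aimed at 45 degrees.
print(laser_triangulation_depth(0.2, 45.0, 45.0))
```

A real scanner does this for every point along each laser line, many times per second, which is where the point cloud comes from.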

Laser triangulation 3D scanning is generally very precise and accurate. But, as usual, this will depend on your budget. Most professional handheld options cost between US$20,000 and US$50,000.

Like structured light, laser 3D scanning on its own cannot generate texture maps. These 3D scanners are used in applications where texture is rarely required anyway (reverse engineering, quality inspection, etc.).

Some options feature built-in photogrammetry modules, but they’re often just to create the “skeleton” of the model thanks to target markers. Markers are small, black and white stickers you apply to parts. They help the 3D scanner, which recognizes the stickers and knows their exact size, achieve more accurate and precise results.

While markers are not always mandatory, they’re used more often than not. They can be a pain to stick and unstick, especially on fragile surfaces. There are sometimes alternatives like magnetic markers, marker-filled nets, scale bars, and so on.

Pros:

- Precision and accuracy

Cons:

- Price

- Often requires markers

- Challenged by dark-coloured surfaces (darkness absorbs light, throws off measurements)

- Challenged by shiny, reflective, and/or transparent surfaces (lasers are reflected, throws off measurements; requires mattifying spray or powder)

Photogrammetry

Photogrammetry is the practice of measuring something based on photos of that thing. You import photos of the object (covering it in 360°) into special software. Each photo must overlap with another, so the algorithm can identify identical features from one photo to the other.

KIRI Engine app that uses photogrammetry for 3D scanning (Image source: Matthew Mensley, All3DP, "Photogrammetry on Android Is Surprisingly Good", https://all3dp.com/4/kiri-engine-android-3d-scanning-app-photogrammetry-hands-ontitle/)

Since the algorithm looks for identical features between photos, the object or person being scanned must stay perfectly still. If the object moves even slightly, the algorithm gets confused, because it has already placed each feature at a given distance from the others. Like the other technologies listed above, it won’t do well with dark, shiny, or transparent surfaces either.

Photogrammetry offers excellent textures and colors, though it won’t always be the right choice if you need accurate 3D mesh and measurements. It cannot determine the object’s size and scale the model accordingly; you must do this manually.
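
Manual scaling usually boils down to one measurement: pick two points on the model, measure the same distance on the real object, and multiply every vertex by the ratio. Here is a minimal Python sketch of that step, with hypothetical function names and vertices stored as plain (x, y, z) tuples; most 3D editing software offers an equivalent tool.

```python
import math


def scale_factor(point_a, point_b, real_distance):
    """Ratio between a real-world distance and the same distance in the model."""
    model_distance = math.dist(point_a, point_b)
    if model_distance == 0:
        raise ValueError("reference points must be distinct")
    return real_distance / model_distance


def rescale(vertices, factor):
    """Return a new vertex list with every coordinate multiplied by factor."""
    return [tuple(coord * factor for coord in vertex) for vertex in vertices]


# Example: two model points are 2 units apart, but the real object
# measures 100 mm between them, so the model is scaled to millimeters.
vertices = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
factor = scale_factor(vertices[0], vertices[1], 100.0)
print(rescale(vertices, factor))
```

The result is only as good as the reference measurement, which is one reason photogrammetry is less suited to precise metrology.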

Anyhow, aside from a camera and a PC that’s powerful enough to run the CPU-intensive software, all you need is the software. Professional photogrammetry software can cost several hundred USD per month, or several thousand as a one-time license fee. Numerous free options also exist, but either way, in most cases, you will need a gaming-grade computer to process your scans.

The most cost-effective option is smartphone photogrammetry. You use the hardware you already have, i.e., your smartphone. And all the processing happens in the cloud, so you can bypass the need for a powerful PC. 3D scanning apps either charge monthly subscriptions or charge for each export or certain export formats.

What can you do for free with KIRI Engine’s photogrammetry app? A lot. Check out our Basic vs Pro pricing breakdown.

Pros:

- Ultra-realistic textures and colors

- Accessible / relatively easy to use

- Price (compared to other tech)

Cons:

- Less accurate 3D meshes

- Challenged by dark-coloured surfaces (hard to distinguish shadows from dark surfaces)

- Challenged by shiny, reflective, and/or transparent surfaces (think of a mirror, the reflection changes depending on where you’re coming from; photogrammetry cannot understand this)

- Challenged by objects that are too simple (not enough features for the algorithm to compare between photos)

- No real-time preview

Challenging surfaces, a common denominator

So now we’ve gone over the main 3D scanning technologies and how they work. We saw that they all come at different prices and with varying output quality.

Amidst this diversity, we can still identify one main common denominator: all these technologies have trouble with the same kinds of surfaces:

- Dark-colored surfaces

- Shiny or reflective surfaces (chromed metals, glossy finishes, …)

- Transparency or translucency (windows, glass, …)

You can work around them by adjusting settings like exposure and contrast, and using accessories like mattifying spray or talc. But the difficulty (and sometimes impossibility) of scanning certain surfaces is there nonetheless, whether you’re using state-of-the-art photogrammetry algorithms or a good old laser 3D scanner.

If you take a look around you, you’ll be surprised and perhaps disappointed to realize that most objects are hard to scan. Once you take each tech’s pros and cons (markers, lighting, …), and add this second layer of “challenging surfaces” that applies to all of these techs, there’s not that much you can easily scan without extra effort or head scratching.

In light of these challenges, NeRF might just be the mind-blowing solution the industry has been waiting for. NeRF-based technology can tackle ALMOST any object, no matter its surface type and shape.

That said, NeRF still has significant drawbacks that must be solved before it can become the ultimate 3D scanning tech alternative. Let’s take a look!

In comes NeRF 3D scanning

NeRF stands for Neural Radiance Field. The way it works behind the scenes can be a bit complex to grasp, so we’ll try to keep it super simple. If you need more in-depth, technical explanations, we included a few resources at the end of this article.

Just like traditional photogrammetry, NeRF processes photo sets taken from many different angles around the object. But while photogrammetry identifies identical features in each overlapping photo of an object, NeRF uses a multilayer perceptron (in plain English, a neural network) to predict what the object would look like from any position and viewing direction, using the given overlapping photos.

Then, it attributes color and opacity data (think RGBA values) to each point in the scene, with the color varying according to the viewing angle. That’s the simple version!
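
To turn those colors and opacities into an actual image, NeRF-style renderers shoot a ray through each pixel, sample points along it, and blend the samples from front to back. Below is a minimal Python sketch of that blending step, following the volume rendering formula from the original NeRF paper. The hard-coded samples stand in for what the neural network would predict, the function name is ours, and this is an illustration rather than KIRI’s implementation.

```python
import math


def render_ray(samples):
    """Blend samples along one camera ray into a single pixel color.

    samples: list of (density, (r, g, b), step_length), ordered near to far.
    Each sample's opacity is alpha = 1 - exp(-density * step_length), and it
    contributes only as much as the light not yet blocked by closer samples.
    Returns the blended (r, g, b) and the ray's total opacity.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light still unblocked so far
    for density, rgb, step_length in samples:
        alpha = 1.0 - math.exp(-density * step_length)
        weight = transmittance * alpha
        for channel in range(3):
            color[channel] += weight * rgb[channel]
        transmittance *= 1.0 - alpha
    return tuple(color), 1.0 - transmittance


# Example: a faint reddish haze in front of a solid blue surface.
ray = [
    (0.5, (1.0, 0.0, 0.0), 0.1),   # thin, mostly transparent sample
    (50.0, (0.0, 0.0, 1.0), 0.1),  # dense, nearly opaque sample
]
print(render_ray(ray))
```

Because every sample carries its own opacity, semi-transparent things like glass or haze simply let part of what’s behind them show through, instead of confusing the reconstruction.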

| Technology | Input | Processing Method | Output |
|---|---|---|---|
| Photogrammetry | Photos are taken all around the object from different angles. | Correlates the feature points of the object from adjacent / overlapping photos, then uses trigonometry to construct the point clouds of those feature points. | A 3D model including mesh and texture maps. |
| NeRF | Photos are taken all around the object from different angles. | Uses "neural network magic" to create a complete scene at every position using a given photo set taken from many angles and heights. | A 3D scene that can be viewed from any view position (NOT a 3D model with mesh and texture maps). |

What does this mean?

All the things, all the wow. Incredible possibilities.

Transparency and lighting are no longer an issue. NeRF will take transparency into account and be able to generate realistic lighting and shadows, even through windows. You’ll be able to render a scene and explore it as if you were there.

Since transparency and lighting are easy peasy now, so are reflective and shiny surfaces. NeRF knows what shine is and will represent it as you move through scenes or around your object.

Visually, NeRF is astonishing. It’s like jumping inside a video, a video that you can control. And you can obtain these results in minutes, without manually 3D modeling everything one by one or post-processing the scan.

There is so much potential for this technology in any and all use cases: special effects, virtual and augmented reality (for social, for education, for entertainment, …), cultural heritage preservation, e-commerce, tourism, real estate, and SO much more.

Our first successful NeRF render. After the first working algorithm, we just got better and better results.

But the one main drawback with Neural Radiance Fields is its current output format. While NeRF has the advantage of being able to scan almost anything, it cannot yet export the data as the kind of standard mesh you’d find with OBJ, FBX, or USDZ.

This means you have something to look at, but you cannot use the 3D data to create standalone 3D models for reverse engineering, 3D printing, and so on.

Many research groups, including ourselves at KIRI, are testing different ways to make 3D meshing possible with NeRF. Solving this is a tricky endeavor but we’re making great progress and can’t wait to release our NeRFed version of KIRI Engine next year!

Conclusion: Is NeRF the future of 3D scanning?

NeRF isn’t yet ready to replace each and every technology that’s already out there, depending on the use case and budget. Whether it ever will depends on how well the kinks of NeRF meshes are worked out. If you can get a 3D model of an object with NeRF and it’s accurate down to the micron, what’ll stop NeRF from replacing 3D scanning in general use cases?

Thus, we believe that once NeRF successfully combines the best of all 3D scanning worlds (photorealistic colors and accurate 3D meshes), it will change, if not become, the future of 3D scanning.

Again, our team is super excited to be pioneering this front, alongside inspiring research teams and companies. As we have done with photogrammetry, we aim to make NeRF technology easily accessible via our apps on Android, iOS and web browsers.

Stay tuned for KIRI Engine NeRFed!