2D Image to 3D Model

This article provides a comprehensive overview of the principles and methodologies behind converting 2D images into 3D models.

The transformation of 2D images into 3D models is no longer confined to high-end studios or specialized hardware. Today, AI algorithms infer depth from subtle gradients in shading, reconstruct textures from fragmented pixels, and turn ordinary photographs into tangible digital forms, democratizing digital creation like never before. This shift is not merely technical; it changes how people interact with virtual and augmented reality, blurring the line between the physical and digital worlds.

This article introduces the differences and characteristics of AI generative modeling and scanning-based modeling, explains when to use each, and shows how to put a scanning-based workflow into practice.

2D image to 3D model: AI Generative Modeling vs Scanning-Based Modeling

The conversion of 2D images into 3D models represents a significant advancement in digital representation, and two primary approaches have emerged: AI Generative Modeling and Scanning-Based Modeling. Each method offers distinct advantages and limitations, making them suitable for different applications.

AI Generative Modeling

AI Generative Modeling for 2D-to-3D conversion has gained significant attention in recent years because it can produce high-quality 3D representations from 2D inputs. The technology uses deep learning and generative models to infer depth, structure, and texture from as little as a single 2D image, turning it into a 3D object that can be manipulated in virtual spaces. Below is a breakdown of the principles and limitations of the AI generative models used in 2D-to-3D conversion, along with their real-world applications.

A common tool for generative 3D modeling is Meshy AI, which transforms text prompts or 2D images into 3D models quickly, lowering the technical barrier for creators in industries such as gaming and product design.

Principles of AI Generative Modeling for 2D-to-3D Conversion

Depth Estimation and Reconstruction

- AI models learn to estimate depth and 3D structure from 2D images. Convolutional Neural Networks (CNNs) are commonly used to extract features from the image, while Monocular Depth Estimation predicts the depth of each part of the scene from a single view, and Stereo Vision recovers depth from the disparity between two views.

- Neural Radiance Fields (NeRF) and Multi-View Stereo (MVS) are often employed for more complex reconstructions, leveraging multiple images of the object taken from different angles to create a detailed 3D model.
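Stereo depth rests on one relation: for a rectified image pair, depth is inversely proportional to disparity, Z = f · B / d, where f is the focal length in pixels, B the distance between the two cameras, and d the horizontal pixel shift of the same point between the views. The sketch below assumes a rectified pair and uses hypothetical camera values; it also shows why distant points, with small disparities, are estimated less precisely.

```python
def disparity_to_depth(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth (metres) of a point seen in a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 700 px focal length, 12 cm baseline.
for d in (84.0, 42.0, 21.0):
    print(f"disparity {d:5.1f} px -> depth {disparity_to_depth(d, 700.0, 0.12):.2f} m")
```

Halving the disparity doubles the depth, so a one-pixel matching error matters far more for far-away surfaces. Monocular networks have no second view and must learn equivalent cues (perspective, shading, object size) from training data.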

Semantic Understanding

- AI-based generative models capture not only the geometric features of an object but also higher-level attributes such as texture, material, and lighting, which enables more realistic rendering of the final 3D model.

- Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) can be trained to generate 3D meshes, ensuring that the model can capture complex shapes and textures from a 2D input.

Generative Networks

- Generative Adversarial Networks (GANs): In 2D-to-3D conversion, GANs are used to generate 3D shapes from 2D images by training two networks—one that generates the 3D model (the Generator) and one that tries to discriminate between real and generated models (the Discriminator). The adversarial training process helps refine the accuracy and realism of the generated 3D models.

- Variational Autoencoders (VAEs): VAEs are used to generate 3D models by encoding the 2D image into a latent space and then decoding it into a 3D structure, maintaining consistency in texture and geometry.
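The "encode into a latent space, then decode" step of a VAE hinges on two small pieces: sampling a latent vector with the reparameterization trick, and a KL-divergence term that keeps the latent space close to a standard normal distribution. The framework-free sketch below shows only those two pieces; the image encoder that produces `mu` and `log_var` and the decoder that turns `z` into a 3D shape are assumed and not shown.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1).

    Writing the sample this way keeps it differentiable with respect to
    mu and log_var, which is what lets a VAE be trained by gradient descent.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


rng = random.Random(0)
mu, log_var = [0.3, -1.2], [0.0, -0.5]   # hypothetical encoder outputs for one image
z = reparameterize(mu, log_var, rng)     # would be fed to a (hypothetical) 3D decoder
print(z, kl_to_standard_normal(mu, log_var))
```

During training, the total loss would be a reconstruction term (how well the decoded 3D shape matches the target) plus this KL term.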

3D Mesh Generation

- AI-based 3D generation typically produces mesh outputs: collections of vertices, edges, and faces that define the shape of a 3D object. The network learns the object's shape by identifying key features in the 2D image and predicting their 3D coordinates.

- Point Clouds or Voxel Grids may also be used for creating 3D models, which can later be converted into meshes for further manipulation.
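To make "vertices, edges, and faces" concrete, here is a minimal sketch of how a mesh is commonly stored: a list of vertex coordinates plus faces that index into it. Edges are not stored separately; they can be derived from the faces. The tetrahedron is an illustrative example, and the Euler characteristic check (V - E + F = 2 for a closed surface without holes) is a quick sanity test.

```python
# A tetrahedron: 4 vertices (x, y, z) and 4 triangular faces given as vertex indices.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]


def edges_from_faces(faces):
    """Collect each undirected edge once, as a sorted pair of vertex indices.

    Consecutive vertices of each face (wrapping around) form its edges, so this
    works for triangles as well as larger polygons.
    """
    edges = set()
    for face in faces:
        for a, b in zip(face, face[1:] + face[:1]):
            edges.add((min(a, b), max(a, b)))
    return edges


edges = edges_from_faces(faces)
print(len(vertices), len(edges), len(faces))      # V, E, F
print(len(vertices) - len(edges) + len(faces))    # Euler characteristic
```

Because faces only reference vertex indices, a mesh can share one vertex among many faces, which keeps files compact and makes operations like smoothing straightforward.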

Limitations of AI Generative Modeling for 2D-to-3D Conversion

Loss of Detail and Accuracy

- While AI can generate plausible 3D models from 2D images, there may be a loss of fine details, particularly when the input image lacks depth information or contains occlusions. The generated model may not perfectly capture intricate structures or subtle textures.

Dependency on Training Data

- The performance of AI models heavily relies on the quality and quantity of the training data. Models trained on limited or biased datasets might generate inaccurate or unrealistic results. For example, if the model is trained on a small set of objects, it might struggle to generate accurate 3D models for new or unseen objects.

Handling Complex Scenes

- Generating 3D models from complex scenes with multiple overlapping objects or cluttered environments remains a challenge. AI models often struggle to correctly infer depth and structure in such scenarios, leading to distorted or incomplete 3D reconstructions.

Lack of Real-Time Processing

- While AI models can generate 3D models relatively efficiently, their computational requirements remain high: depending on the model and the output quality, producing a single result can take anywhere from seconds to hours on high-end GPUs. Real-time 2D-to-3D conversion therefore remains a challenge for applications like live streaming, gaming, or AR/VR, where immediate generation and feedback are crucial.

Generalization to Different Object Types

- AI generative models trained on specific object categories (e.g., human faces, cars, or furniture) might not generalize well to other object types. This limits their application unless the training dataset is highly diverse and comprehensive.

Applications of AI Generative Modeling in 2D-to-3D Conversion

Gaming and Virtual Reality (VR)

- AI generative models are increasingly used in game development to create 3D models of characters, environments, and assets from concept art or sketches. The same tools can help generate dynamic levels or environments for VR experiences, making immersive worlds faster and cheaper to build.

E-Commerce

- In e-commerce, AI can convert product images into 3D models for Virtual Try-Ons or 3D Product Visualization. This helps customers interact with products by rotating or viewing them from different angles, improving the online shopping experience and potentially boosting conversion rates.

Cultural Heritage Preservation

- AI generative models are being used to Digitally Preserve Historical Artifacts and Archaeological Sites. By converting 2D photographs of ancient sculptures, ruins, or manuscripts into 3D models, researchers and museums can digitally store and restore cultural heritage, making it more accessible to the public and to future generations. However, because artifacts are rarely well represented in AI training data, these algorithms often struggle to infer an artifact's complete shape.

Healthcare

- In Medical Imaging, AI can convert stacks of 2D scan slices (such as CT or MRI images) into 3D models for Pre-Surgical Planning, Custom Prosthetics Design, and Medical Education. These models give a more complete view of a patient’s anatomy than individual slices, aiding diagnosis and treatment.

Interior Design and Architecture

- AI-powered tools can convert floor plans or 2D architectural sketches into 3D models of buildings, interiors, or urban landscapes. This simplifies the process of designing spaces and allows for easy Virtual Walkthroughs of buildings before construction begins, offering better visualization for clients and architects.

Film and Animation

- In film production and animation, AI is used to convert 2D concept art and storyboard images into 3D models that serve as the foundation for creating Animated Characters, Virtual Sets, and Visual Effects (VFX). This significantly reduces the time and effort required during the pre-production stages.

Scanning-Based Modeling

Scanning-Based Modeling is another critical method for converting 2D images into 3D models. This approach captures the geometry and texture of real-world objects or environments, either with specialized hardware (e.g., depth sensors or laser scanners) or with software that reconstructs geometry from ordinary photographs (e.g., photogrammetry software). While it offers compelling advantages, it also comes with specific limitations. Below is a breakdown of the principles, features, limitations, and applications of scanning-based modeling.

Principles of Scanning-Based Modeling

3D Scanning Technology

- Photogrammetry: This method involves capturing multiple 2D images from different angles around an object or scene. Software then processes these images to reconstruct a 3D model by detecting common features in the images and triangulating the position of points in 3D space.

- Laser Scanning (LiDAR): LiDAR (Light Detection and Ranging) uses laser pulses to measure distances between the sensor and surfaces. It collects dense point clouds that represent the 3D structure of objects or environments. This method is commonly used in industrial, architectural, and geographical scanning.

- Structured Light Scanning: This involves projecting a series of light patterns onto an object. The way the patterns deform on the surface of the object is captured by cameras, and from this, a 3D model is reconstructed.
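The core geometric steps of two of these methods fit in a few lines. In photogrammetry, once a feature has been matched in two photos and both camera positions are known, each observation defines a ray from the camera centre through that pixel, and the 3D point lies where the rays (nearly) meet. The sketch below uses the simple midpoint method as one illustrative triangulation technique; production pipelines often use linear or least-squares variants over many views. It assumes the camera centres `c1`, `c2` and ray directions `d1`, `d2` are already known (in practice they come from structure-from-motion). The LiDAR part is plain time-of-flight: distance is the speed of light times the round-trip time, halved.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(c1, d1, c2, d2):
    """Midpoint of the shortest segment between rays c1 + t*d1 and c2 + s*d2."""
    w0 = [p - q for p, q in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)   # scalar dot products
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    t = (b * e - c * d) / denom       # parameter of the closest point on ray 1
    s = (a * e - b * d) / denom       # parameter of the closest point on ray 2
    p1 = [ci + t * di for ci, di in zip(c1, d1)]
    p2 = [ci + s * di for ci, di in zip(c2, d2)]
    return tuple((u + v) / 2 for u, v in zip(p1, p2))


SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def lidar_range(round_trip_s):
    """Time-of-flight ranging: the pulse travels to the surface and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2


# Two hypothetical cameras 1 m apart, both seeing the same feature.
print(triangulate_midpoint((0, 0, 0), (0.5, 0, 2), (1, 0, 0), (-0.5, 0, 2)))
print(lidar_range(20e-9))  # a 20 ns echo
```

With noisy matches the two rays rarely intersect exactly, which is why the midpoint of their closest approach is used as the estimate.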

Point Cloud Generation

- The primary output of scanning-based modeling is the Point Cloud, which represents the object or scene as a collection of 3D points, each having an XYZ coordinate in 3D space. These points are then processed into a Mesh that can be textured and manipulated further to create a complete 3D model.

Mesh Construction

- After the point cloud is generated, it needs to be converted into a Polygonal Mesh. This is typically done with techniques such as Delaunay Triangulation, which connects the points directly into triangles, or Poisson Surface Reconstruction, which fits a smooth surface to the points (using their normals) and extracts a mesh from it. Once the mesh is created, the model can be refined, smoothed, and textured.
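Delaunay and Poisson reconstruction are too involved for a short example, so the sketch below swaps in a much simpler technique to illustrate what "connecting points into a surface" means: grid triangulation of an organized point cloud, such as one back-projected from a depth map, where points are stored row by row. Each grid cell becomes two triangles, and cells touching a missing point are skipped. This only works for such grid-structured data and is not how Delaunay or Poisson reconstruction work internally.

```python
def grid_faces(rows, cols, missing=frozenset()):
    """Triangulate a rows x cols grid of points (row-major indices), two triangles per cell.

    `missing` holds indices of points with no valid measurement; any triangle
    that would use one of them is skipped, leaving a hole in the mesh.
    """
    faces = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            tl = r * cols + c    # top-left corner of the cell
            tr = tl + 1          # top-right
            bl = tl + cols       # bottom-left
            br = bl + 1          # bottom-right
            for tri in ((tl, bl, tr), (tr, bl, br)):
                if not missing.intersection(tri):
                    faces.append(tri)
    return faces


print(grid_faces(2, 2))            # one cell -> two triangles
print(len(grid_faces(3, 3)))       # four cells -> eight triangles
```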

Features of Scanning-Based Modeling

High Accuracy

- One of the key advantages of scanning-based modeling is its accuracy. 3D scanning technologies, especially LiDAR and high-resolution photogrammetry, can produce highly detailed and precise models, making them suitable for applications that require high precision, such as heritage preservation, architecture, and engineering.

Real-World Representation

- Unlike AI-driven generative methods, scanning-based modeling captures the Actual Geometry of real-world objects or environments. This ensures a high level of realism and accuracy in the generated 3D model, making it ideal for applications that require a precise representation of existing physical objects or scenes.

Texture and Detail Preservation

- Scanning methods, especially photogrammetry, can capture not only the geometry but also the textures of objects. This allows for the creation of realistic 3D models with High-Quality Textures, preserving the visual details of the scanned object or scene. The texture mapping process is often done by taking high-resolution photographs and applying them to the generated 3D mesh.
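Applying photographs to a mesh starts with projection: each mesh vertex is projected into a photo with the camera's pinhole model, and the resulting pixel position becomes a texture coordinate. The sketch below assumes the vertex is already expressed in that camera's coordinate frame (the camera pose comes from the reconstruction), ignores lens distortion, and uses hypothetical intrinsics `fx`, `fy`, `cx`, `cy`. Flipping `v` so it points up follows the OBJ/OpenGL UV convention, which is an assumption here. Real texturing also handles occlusion and blends several photos per surface.

```python
def project_to_uv(point_cam, fx, fy, cx, cy, width, height):
    """Project a camera-space 3D point to pixel coordinates, then to [0, 1] UV.

    Returns None when the photo cannot texture the point.
    """
    x, y, z = point_cam
    if z <= 0:
        return None                    # behind the camera
    u_px = fx * x / z + cx             # pinhole projection
    v_px = fy * y / z + cy
    if not (0 <= u_px < width and 0 <= v_px < height):
        return None                    # falls outside the image frame
    # Image rows grow downward; UV v is assumed to grow upward.
    return (u_px / width, 1.0 - v_px / height)


# Hypothetical 1920x1080 photo with a 1000 px focal length.
print(project_to_uv((0.0, 0.0, 2.0), 1000, 1000, 960, 540, 1920, 1080))
```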

Versatility in Input Data

- Scanning-based models can be created from a variety of input data, such as 2D photos, frames extracted from video, and depth maps. This versatility allows users to choose the most appropriate method based on the available resources and desired output.

Automatic Workflow

- Once the 3D data has been captured, the process of converting point clouds into meshes and applying textures can often be automated. This reduces the manual effort required to produce accurate 3D models and accelerates the workflow.

Limitations of Scanning-Based Modeling

High Cost and Equipment Requirements

- High-precision 3D scanning equipment, such as LiDAR scanners or structured light devices, can be expensive. While photogrammetry can be done with regular cameras, even smartphones, achieving high accuracy still requires a large number of quality images and powerful processing hardware.

- In addition to the hardware, the software needed to process the captured data and convert it into 3D models can also be costly, making scanning-based modeling less accessible for casual or small-scale users.

Data Processing and Complexity

- The point cloud data generated from scanning can be extremely dense and complex. Processing large point clouds to create clean, usable meshes can require significant computational resources and time, especially for highly detailed scans.

- Complex scenes with many overlapping objects or intricate details can lead to messy point clouds that are difficult to process, requiring extensive manual cleanup and refinement.
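One standard way to tame an overly dense point cloud is voxel-grid downsampling: space is divided into cubes of a chosen size, and all points inside the same cube are replaced by their average. The pure-Python sketch below illustrates the idea; real tools implement it far more efficiently, and the voxel size is a tuning choice that trades detail for speed.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Replace all points that fall into the same cubic voxel with their centroid."""
    buckets = defaultdict(list)
    for p in points:
        # Integer voxel coordinates identify which cube the point belongs to.
        key = tuple(math.floor(coord / voxel_size) for coord in p)
        buckets[key].append(p)
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts)) for pts in buckets.values()]


cloud = [(0.01, 0.01, 0.01), (0.03, 0.03, 0.03), (0.5, 0.5, 0.5)]
print(voxel_downsample(cloud, 0.1))   # the first two points merge into one
```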

Limitations in Accuracy in Certain Environments

- Scanning-based modeling can struggle in certain environments. For example, Shiny or Transparent surfaces, like glass or water, reflect or transmit light in ways that make accurate data collection challenging for LiDAR or photogrammetry methods.

- Indoor Spaces with poor lighting or cluttered environments can result in incomplete or inaccurate scans, especially if the scanning equipment fails to capture all necessary data from every angle.

Limited for Dynamic Objects

- Scanning-based modeling works best for Static Objects. Capturing dynamic objects or scenes, such as people in motion or moving vehicles, can be difficult. While motion capture systems exist, they are typically separate from traditional 3D scanning methods and can require specialized equipment.

Post-Processing Time

- After capturing the scan, significant Post-Processing is often required to clean up the point clouds, remove noise, and generate high-quality meshes. This step can be time-consuming and requires skilled operators.
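A typical noise-removal step is statistical outlier removal: for every point, compute the mean distance to its k nearest neighbours, then discard points whose mean distance is far above the average for the whole cloud. The sketch below shows the idea with a brute-force neighbour search, which is fine for illustration but far too slow for real scans; the defaults `k=3` and `std_ratio=2.0` are illustrative choices, not recommended settings.

```python
import math
import statistics


def remove_statistical_outliers(points, k=3, std_ratio=2.0):
    """Drop points whose mean distance to their k nearest neighbours is unusually large.

    Brute-force O(n^2) neighbour search: a sketch only.
    """
    if len(points) <= k:
        return list(points)
    mean_dists = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)
    mu = statistics.fmean(mean_dists)
    sigma = statistics.pstdev(mean_dists)
    threshold = mu + std_ratio * sigma          # "unusually far" cut-off
    return [p for p, d in zip(points, mean_dists) if d <= threshold]


# A flat 5x5 patch of points plus one stray measurement far away.
patch = [(0.1 * i, 0.1 * j, 0.0) for i in range(5) for j in range(5)]
cleaned = remove_statistical_outliers(patch + [(10.0, 10.0, 10.0)])
print(len(cleaned))
```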

Applications of Scanning-Based Modeling

Cultural Heritage Preservation

- Scanning-based modeling is widely used in the Preservation of Historical Sites and Artifacts. By scanning objects such as ancient sculptures, buildings, or murals, detailed 3D models can be created for documentation, restoration, and public access. For example, LiDAR scanning has been used to digitally preserve ancient ruins like those in Petra and Pompeii.

Architecture and Construction

- In architecture, scanning-based modeling is used for Building Information Modeling (BIM), where real-world structures are scanned and turned into 3D models for design, planning, and construction. This is especially useful for Renovations or Site Surveys, where existing structures must be precisely replicated.

- LiDAR scanning is also used for Terrain Modeling in construction, allowing engineers to map out accurate topography for large-scale projects like highways, bridges, or urban planning.

Virtual and Augmented Reality (VR/AR)

- VR and AR applications benefit from scanning-based modeling because the resulting 3D models can be used to create interactive environments or objects that users can manipulate or explore in real time. For instance, scanning an archaeological site and then viewing it in AR allows users to "walk through" historical locations.

Gaming and Film Production

- In game and film productions, 3D models of real-world objects or locations are often needed to create realistic environments. Scanning-based modeling provides a way to capture the intricate details of props, landscapes, and characters. For example, CGI artists use photogrammetry to create 3D models of real-world environments for special effects and animated scenes.

Medical and Forensic Imaging

- In Forensic Science, scanning-based modeling can be used to recreate crime scenes in 3D for analysis. Similarly, in Medical Applications, scanning techniques are used to create 3D models of organs, bones, or even entire body scans (such as CT scans) for diagnosis, surgery planning, or custom prosthetics design.

E-Commerce

- E-commerce websites can use scanning-based models to create 3D visualizations of products, allowing customers to view items from all angles before purchase. For example, furniture stores often provide 3D models of their products to be viewed in the buyer’s home using AR apps.

Choosing Between AI-Generated and 3D Scanning Models: When to Use Each Approach

AI-Generated 3D Models

- Ideal for scenarios where physical samples are unavailable, or when rapid concept design or prototyping is required. It is also well suited to stylized models for gaming and virtual reality. AI generation offers high efficiency and creative flexibility, making it a good fit for large-scale content production and early-stage design.

3D Scanning Models

- Best suited for scenarios demanding high precision and realistic reproduction, such as cultural heritage preservation, architectural surveying, medical imaging, industrial design, or game development. Scanning methods accurately capture real-world details, ensuring high fidelity and realism—especially for game scenes, characters, or objects that require authentic representation.

3D Scanning App KIRI Engine

KIRI Engine is a powerful 3D scanning app that specializes in converting 2D images into high-quality 3D models. Here is an overview of its key features from the perspective of scanning 3D models from 2D images.

Key Features of KIRI Engine

Photogrammetry-Based Scanning

- KIRI Engine utilizes advanced photogrammetry technology, allowing users to take multiple 2D images of an object from different angles. The app processes these images to accurately reconstruct the object into a detailed 3D model.

High-Quality 3D Model Creation

- The app can generate 3D models with precise geometry and realistic textures, ensuring the final output captures fine details of the original object.

User-Friendly Interface

- Designed for both beginners and professionals, KIRI Engine offers an intuitive interface that simplifies the 3D scanning process. Users can easily upload images and generate models without needing advanced technical skills.

Cross-Platform Export

- The generated 3D models can be exported in various formats such as OBJ, FBX, STL, GLB, GLTF, USDZ, PLY, and XYZ, making them compatible with popular 3D modeling software like Blender, Unity, and Unreal Engine.
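To show what one of these formats actually stores, here is a minimal hand-written Wavefront OBJ serializer for a triangle mesh: one `v` line per vertex and one `f` line per face, with face indices counted from 1. This is an illustrative sketch, not KIRI Engine's exporter; real exports also carry texture coordinates (`vt`), normals (`vn`), and a material file that links the texture image.

```python
def to_obj(vertices, faces):
    """Serialize a mesh to Wavefront OBJ text (OBJ face indices are 1-based)."""
    lines = [f"v {x:.6f} {y:.6f} {z:.6f}" for x, y, z in vertices]
    lines += ["f " + " ".join(str(i + 1) for i in face) for face in faces]
    return "\n".join(lines) + "\n"


triangle = [(0, 0, 0), (1, 0, 0), (0, 1, 0)]
print(to_obj(triangle, [(0, 1, 2)]))
```

Because OBJ is plain text, it is easy to inspect and widely supported, while binary formats such as GLB or FBX are more compact and can bundle textures and scene data.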

Cloud Processing

- KIRI Engine offers cloud-based processing, which offloads the heavy computation from local devices and speeds up model generation.

Free and Accessible

- KIRI Engine provides a free version that allows users to create high-quality 3D models, making it accessible for hobbyists, artists, and professionals alike. You can download it and create your 3D models free of charge.

Four Scanning Modes for the KIRI Engine

KIRI Engine is a versatile 3D scanning app that supports four advanced scanning modes to accommodate different object types and scanning needs. Here is an overview of each method.

Photo Scan

Utilizes photogrammetry technology by capturing multiple 2D images from various angles. The app processes these images to reconstruct detailed and textured 3D models. Ideal for everyday objects, artworks, and product prototypes where texture and surface detail are important.

LiDAR Scan

Leverages LiDAR (Light Detection and Ranging) technology available on certain smartphones and devices. Captures depth information to generate precise point clouds and highly accurate 3D models. Best suited for scanning large environments or complex surfaces with intricate geometry.
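A phone LiDAR sensor typically delivers a depth map: one distance reading per pixel. With the camera intrinsics, each reading can be back-projected into a 3D point, which is the inverse of pinhole projection. The sketch below assumes a depth map given as rows of metric depths, with 0 marking pixels that returned no reading, and hypothetical intrinsics `fx`, `fy`, `cx`, `cy`. It illustrates the general principle, not KIRI Engine's internal processing.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map (rows of metric depths, 0 = no reading) into camera-space XYZ."""
    points = []
    for v, row in enumerate(depth):          # v: pixel row
        for u, z in enumerate(row):          # u: pixel column
            if z <= 0:
                continue                     # no LiDAR return for this pixel
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


tiny_depth = [[1.0, 0.0],
              [2.0, 2.0]]
print(depth_to_points(tiny_depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0))
```

Each frame yields a point cloud in its own camera frame; a scanning app must also track the device pose to merge frames into one model.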

Featureless Object Scan

Specifically designed to scan objects with minimal surface details—like smooth, reflective, or single-colored items. Utilizes advanced algorithms to detect subtle geometrical cues, overcoming the typical challenges of photogrammetry. Useful for scanning objects such as ceramics, glass, or metallic surfaces.

3D Gaussian Splatting (3DGS) Scan

An emerging technique that represents a scene as a large set of 3D Gaussians, which are projected ("splatted") onto the image and blended, enabling efficient real-time rendering and high-fidelity reconstruction. It allows fast generation of realistic 3D models with smooth viewpoint transitions. Ideal for dynamic 3D visualizations, gaming assets, and virtual reality content.
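The blending step that makes splatting fast is ordinary front-to-back alpha compositing: splats covering a pixel are sorted by depth, and each adds its colour weighted by its opacity and by how much light still passes through the splats in front of it. The simplified sketch below assumes each splat has already been projected to 2D (a pixel-space mean, a 2x2 covariance, an opacity, an RGB colour, and a depth); real implementations also compute view-dependent colour and run on the GPU in tiles.

```python
import math


def gaussian_weight(px, py, mean, cov):
    """Unnormalized 2D Gaussian falloff exp(-0.5 * d^T Sigma^-1 d) at pixel (px, py)."""
    (a, b), (_, c) = cov                 # symmetric covariance [[a, b], [b, c]]
    det = a * c - b * b
    dx, dy = px - mean[0], py - mean[1]
    # Inverse of [[a, b], [b, c]] is [[c, -b], [-b, a]] / det.
    mahal = (c * dx * dx - 2 * b * dx * dy + a * dy * dy) / det
    return math.exp(-0.5 * mahal)


def composite_pixel(px, py, splats):
    """Blend splats front to back: C = sum_i c_i * alpha_i * prod_{j<i} (1 - alpha_j)."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0                  # fraction of light not yet blocked
    for splat in sorted(splats, key=lambda s: s["depth"]):
        alpha = splat["opacity"] * gaussian_weight(px, py, splat["mean"], splat["cov"])
        for ch in range(3):
            color[ch] += transmittance * alpha * splat["color"][ch]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:
            break                        # pixel is effectively opaque
    return tuple(color)


red = {"mean": (0.0, 0.0), "cov": ((1.0, 0.0), (0.0, 1.0)),
       "opacity": 0.5, "color": (1.0, 0.0, 0.0), "depth": 1.0}
print(composite_pixel(0.0, 0.0, [red]))
```

Because each splat is a simple closed-form ellipse rather than a sample along a ray, this step is much cheaper than the per-ray volume rendering used by NeRF.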

3DGS to Mesh Conversion

KIRI Engine includes a 3DGS to Mesh feature, allowing users to Convert Gaussian Splatting (3DGS) Models into Standard Mesh Formats. This makes it easier to integrate 3DGS-generated models into conventional 3D workflows, with more flexibility for editing, texturing, and rendering. Because 3DGS can capture whole scenes and objects that are difficult for traditional photogrammetry, converting it to a mesh carries those strengths into mesh-based tools. The conversion process also aims to preserve high visual fidelity while making the model compatible with various 3D platforms.

Free Blender Plugin

Recognizing that platforms like Blender do not natively support direct viewing of 3DGS files, KIRI Engine offers a dedicated free Plugin for Blender. This plugin allows creators to Import and View 3DGS models seamlessly within Blender, bridging the compatibility gap. It also supports further editing and rendering of converted mesh models, enhancing workflow efficiency for Blender users.

How to use KIRI Engine to scan 2D images into 3D models

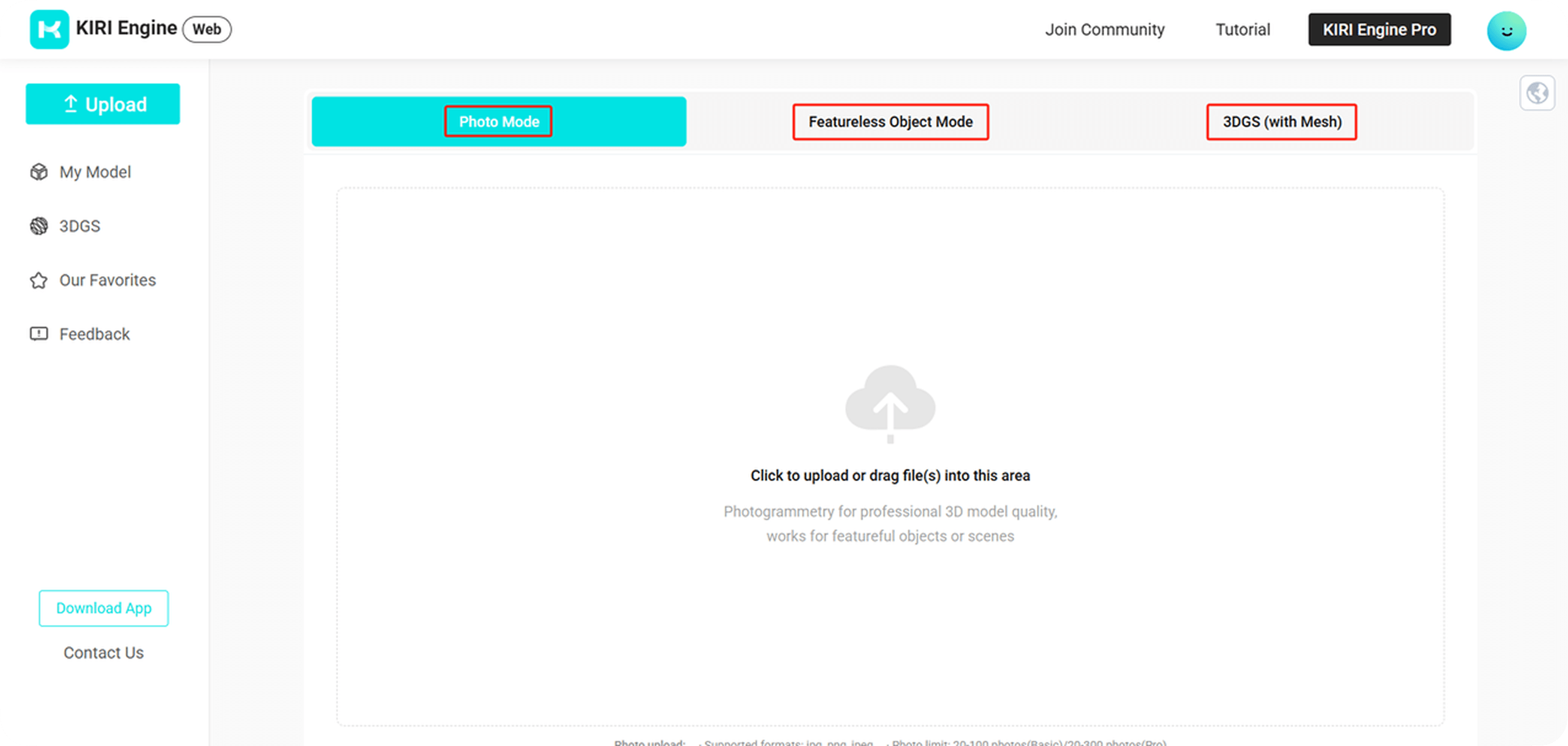

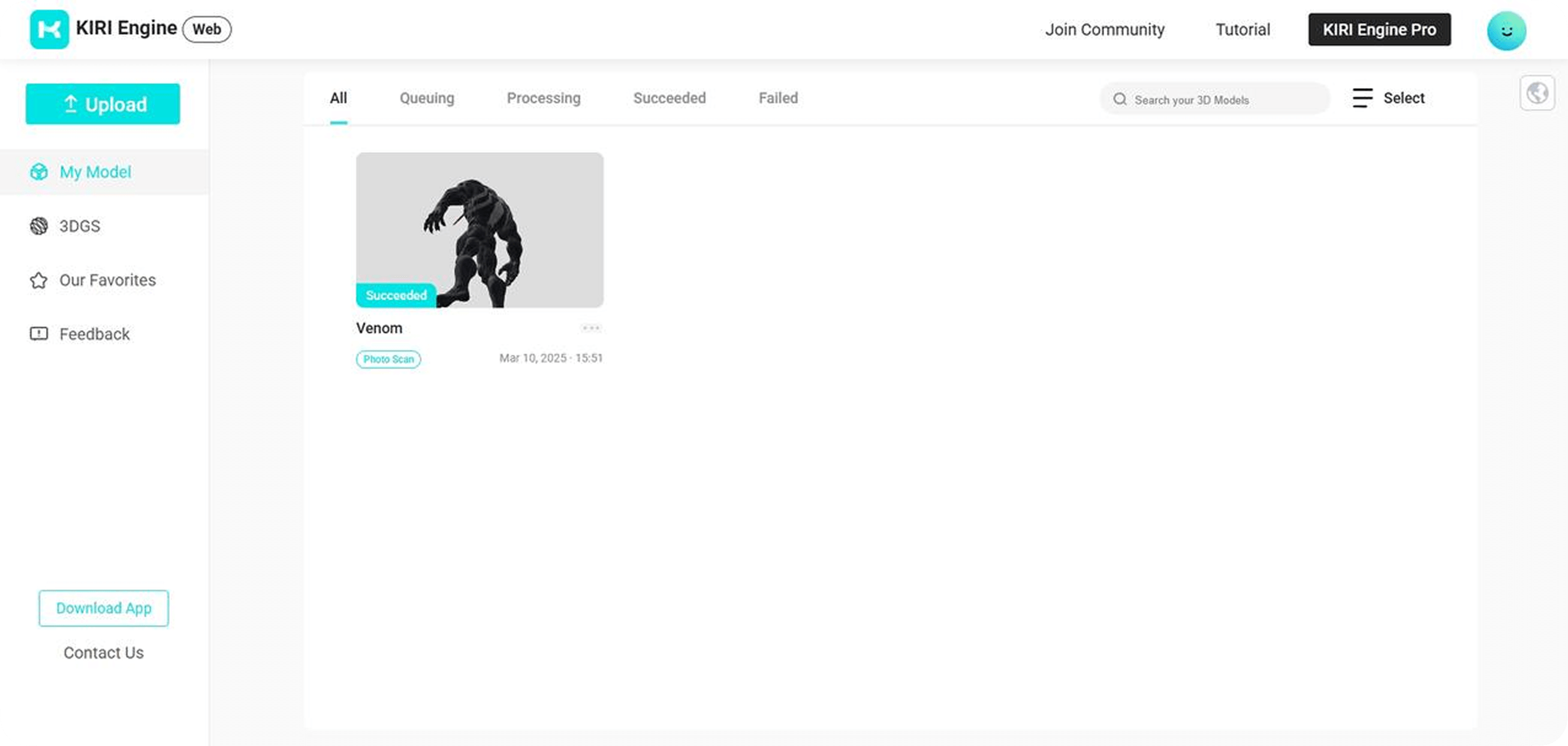

1. Open the Web Version of KIRI Engine and Log into Your Account

Or download our app.

2. Select the Scanning Mode

Select the scanning mode you want to use to generate the 3D model: Photo Mode, Featureless Object Mode, or 3DGS (With Mesh). Each mode has its own strengths and characteristics, so choose the one that best fits your object and needs.

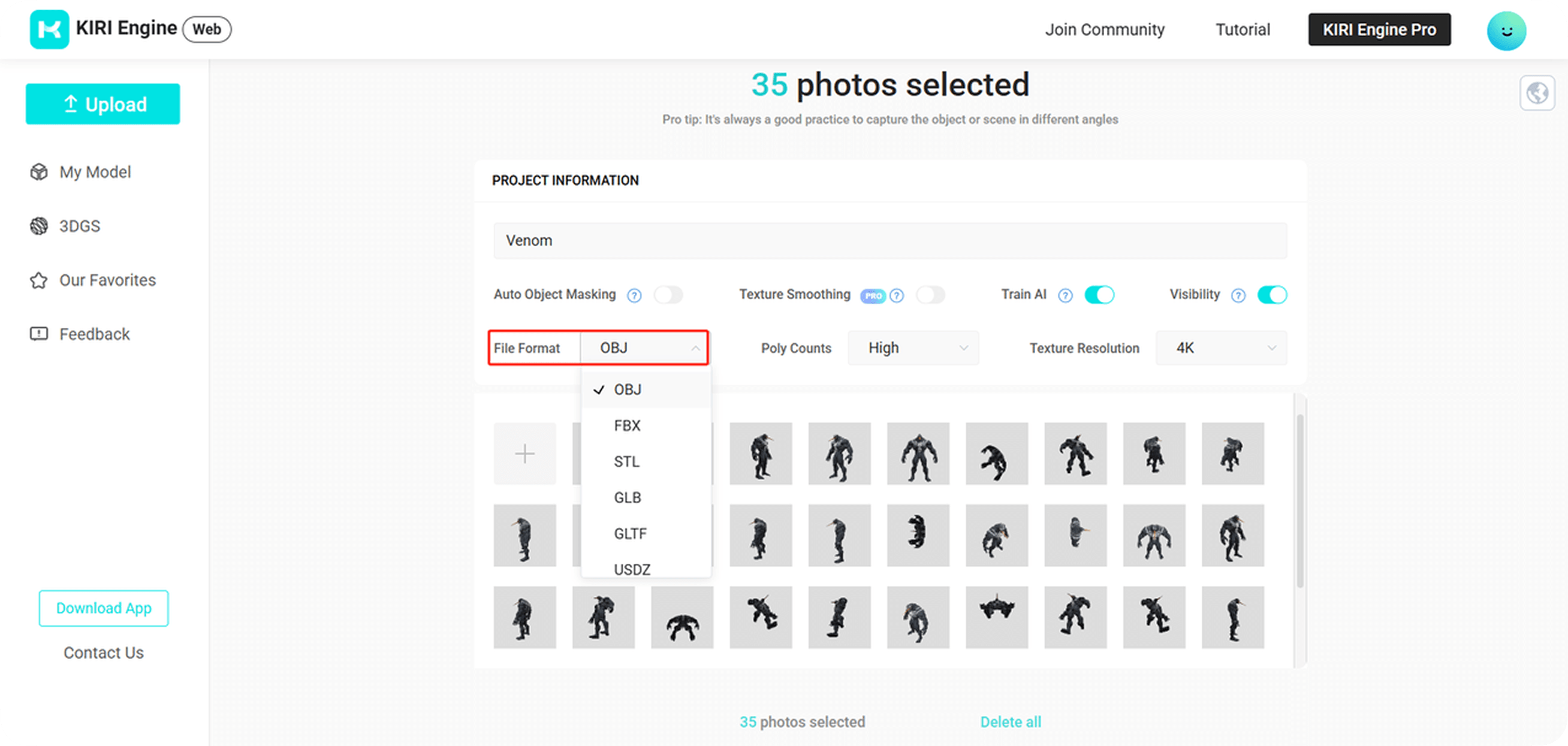

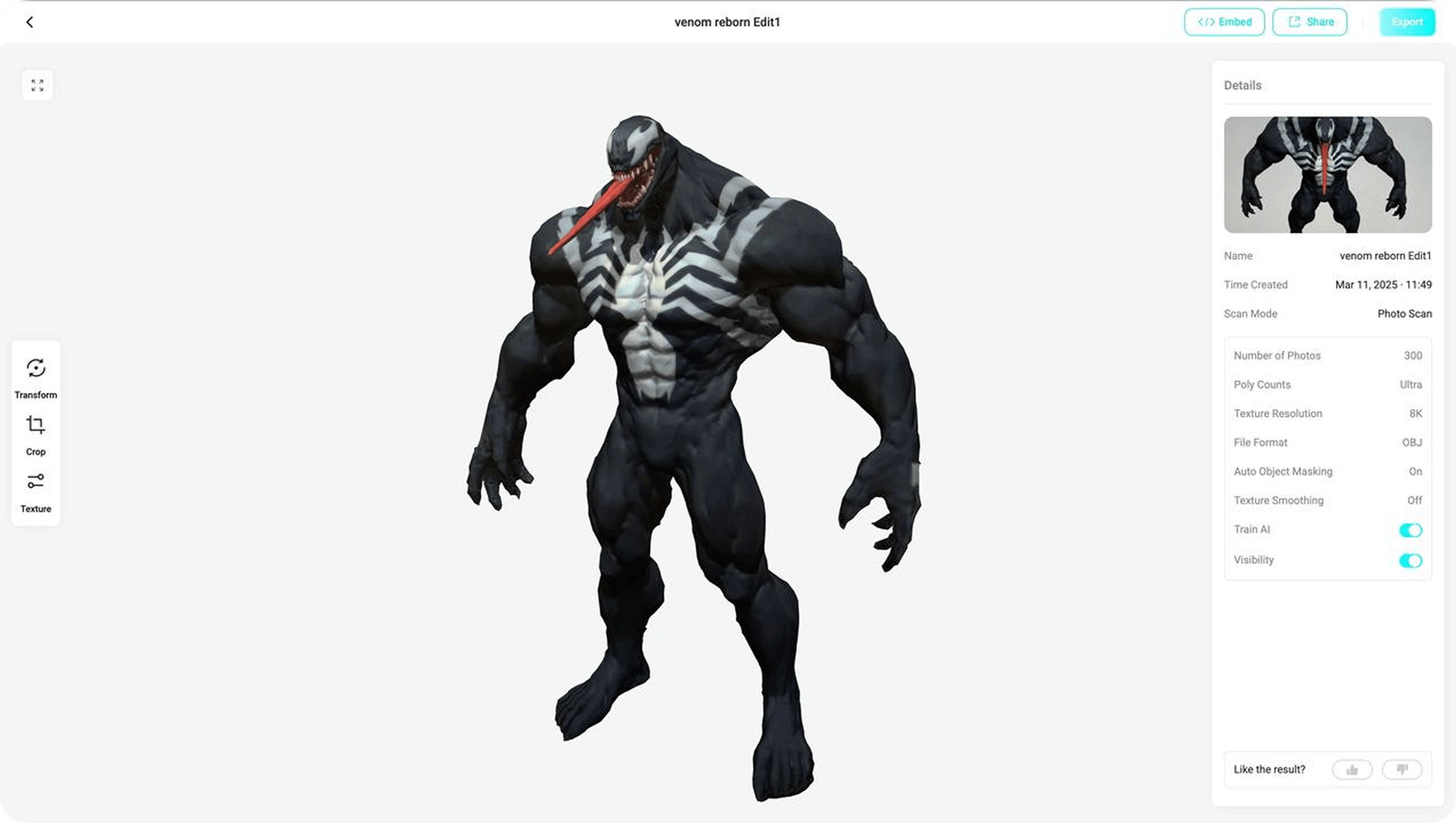

3. Upload The Images and Set the Corresponding Model Parameters

Output File Formats: Choose from OBJ, FBX, STL, GLB, GLTF, USDZ, PLY, and XYZ, picking the format that is compatible with your target tool, such as Blender, Unreal Engine, or Autodesk Maya.

Model Face Count: Adjust the polygon density (mesh resolution) to balance detail against performance for your intended use.

Texture Resolution: Choose between 1K, 2K, 4K, and 8K textures to set the level of surface detail. We recommend turning off Texture Smoothing if you have taken sharp photos all around the object, since smoothing aims to produce a consistent texture at the potential cost of reduced sharpness.

Auto Object Masking: Automatically isolates the subject from the background, producing clean, noise-free models by removing extraneous elements. In our example capture, we turned this feature ON because of the complex angles and the many close-ups throughout the capture.

Visibility: This toggle controls whether you grant the KIRI developers consent to use the model; enabling it shares the model publicly under a CC 4.0 license, attributed to your username.
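The texture resolution setting has a direct cost: each step up doubles the edge length and therefore quadruples the pixel count. The sketch below estimates uncompressed memory, assuming "1K" means 1024 x 1024 pixels and 8-bit RGBA (4 bytes per pixel). Compressed files such as PNG or JPEG, and GPU texture compression, will be considerably smaller, while a full mipmap chain adds roughly a third on top.

```python
def texture_bytes(edge_px, channels=4, bytes_per_channel=1):
    """Uncompressed size in bytes of a square texture with the given channel layout."""
    return edge_px * edge_px * channels * bytes_per_channel


for label, edge in (("1K", 1024), ("2K", 2048), ("4K", 4096), ("8K", 8192)):
    print(f"{label}: {texture_bytes(edge) / 2**20:.0f} MiB")
```

Under these assumptions this prints 4, 16, 64, and 256 MiB, so an 8K texture is worth choosing only when the model will be viewed up close.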

4. Wait for KIRI Engine to Scan the Images and Successfully Create Your 3D Model

If you have any questions, you can reach out to us at contact@kiri-innov.com, or check out our YouTube channel, where we regularly post quick guides and unique insights!

In the End

The advancement of technology has allowed us to bridge the gap from 2D to 3D: almost anything, flat or dimensional, can now be presented in three dimensions before our eyes. Converting a 2D image into a 3D model can now take just a few minutes. Using KIRI Engine to scan and create your 3D model is incredibly simple, right? There's no need to be limited by expensive 3D scanning equipment; you can scan and generate your 3D model anytime, anywhere. I believe that in the near future, we will be able to fully digitize the entire world. The plot of the movie Ready Player One might actually become a reality, and this is just the first step.